A Deep Dive into Modern Transformers: Optimizing Attention

AI, But Simple Issue #85

Hello from the AI, but simple team! If you enjoy our content (with 10+ custom visuals), consider supporting us so we can keep doing what we do.

Our newsletter is not sustainable to run at no cost, so we’re relying on different measures to cover operational expenses. Thanks again for reading!

Artificial intelligence research dates back to the 1950s, but in 2017 a team of researchers at Google published a paper that transformed the landscape: “Attention Is All You Need.”

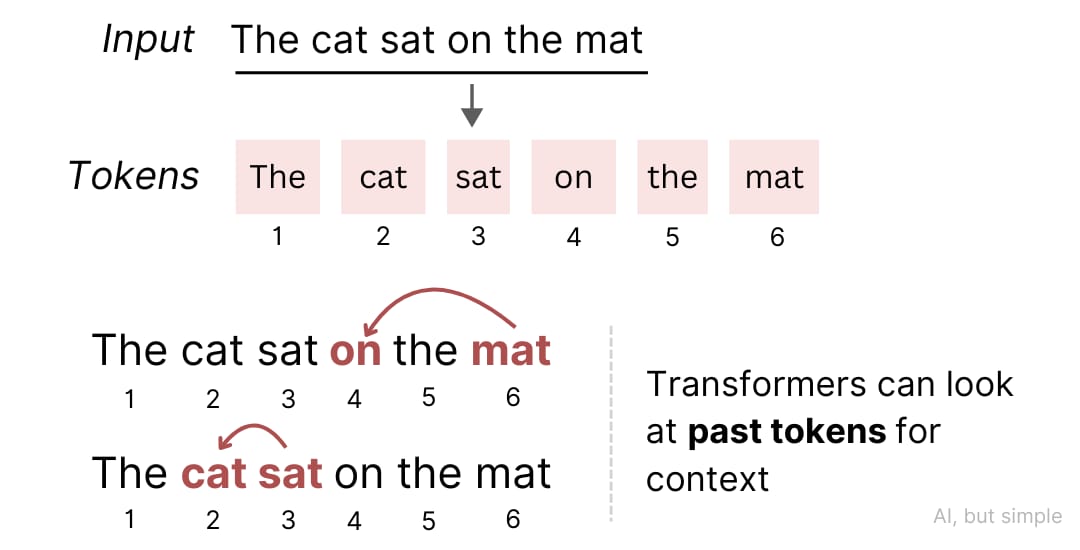

The transformer architecture popularized self-attention, a mechanism that lets every token in the input sequence weigh how relevant each of the other tokens is when building its own representation.
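To make this concrete, here is a minimal sketch of single-head self-attention in the scaled dot-product form used in the 2017 paper, softmax(QKᵀ/√d_k)V. It is written in plain Python so it runs without dependencies. The projection matrices `w_q`, `w_k`, and `w_v` are assumed to be already learned (the usage example simply passes identity matrices), and masking, multiple heads, and batching are deliberately left out.

```python
import math

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(row):
    """Numerically stable softmax over one list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention (no masking).

    x: one embedding vector per token (seq_len x d_model).
    w_q, w_k, w_v: projection matrices (d_model x d_k), assumed already learned.
    """
    q = matmul(x, w_q)  # queries: what each token is looking for
    k = matmul(x, w_k)  # keys: what each token offers
    v = matmul(x, w_v)  # values: the content that gets mixed together
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]  # transpose the keys
    # scores[i][j]: how strongly token i attends to token j
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, k_t)]
    weights = [softmax(row) for row in scores]  # each row sums to 1
    return matmul(weights, v), weights

# Usage: three tokens with 2-dimensional embeddings and identity projections
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
out, weights = self_attention(x, identity, identity, identity)
print(weights[0])  # token 0 attends equally to tokens 0 and 2, less to token 1
```

Because each row of `weights` sums to 1, every output vector is a weighted average of the value vectors. Production implementations compute the same thing with batched matrix operations on accelerators rather than Python loops.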

The core idea behind these transformers is by now widely studied: feed in a sequence of tokens, process them with attention, and output a probability distribution over the possible next tokens, from which the next token is sampled.
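The last step of that pipeline, turning the model's raw output scores (logits) into a probability distribution and sampling from it, fits in a few lines. This is a minimal sketch in plain Python. The three-word vocabulary and its logit values are made up for illustration; a real model produces one logit per entry in a vocabulary of tens of thousands of tokens and often reshapes the distribution (e.g., with a temperature) before sampling.

```python
import math
import random

def next_token_distribution(logits):
    """Turn raw scores (logits) into a probability distribution via softmax."""
    m = max(logits.values())
    exps = {tok: math.exp(score - m) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

def sample_next_token(logits, rng):
    """Draw one token with probability proportional to its softmax weight."""
    probs = next_token_distribution(logits)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]

# Hypothetical logits a model might output after the prompt "The cat sat on the"
logits = {"mat": 3.0, "sofa": 1.5, "moon": -1.0}
rng = random.Random(0)  # seeded so runs are reproducible
probs = next_token_distribution(logits)
print(probs)
print(sample_next_token(logits, rng))
```

Sampling (rather than always taking the most likely token) is what lets the same prompt produce different continuations.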

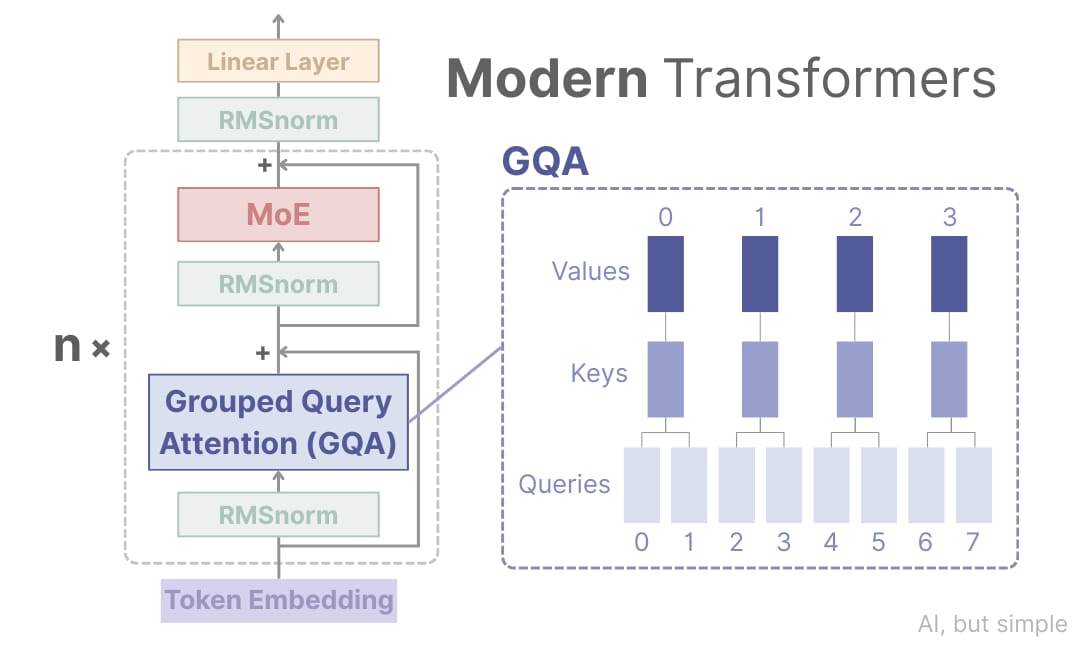

However, the transformers behind modern LLMs such as GPT-5, Gemini 3 Pro, and DeepSeek R1 have undergone significant updates and optimizations; the attention mechanism in particular has been heavily reworked.

In this issue, we’ll dive deep into attention and its modern variants, showing exactly how they improve performance.

A Review of “Regular” Attention

Let’s trace how self-attention is computed and used. The process splits into two phases: the training phase and the generation phase (inference).

The Training Phase

Let’s go over the training process of a very basic transformer. We first select a corpus (a body of text, e.g., Wikipedia) to train the model.
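As a rough sketch of how a corpus becomes training data for next-token prediction, the snippet below tokenizes text and slides a fixed-size window over it, pairing each input window with the same window shifted one token ahead, so each position's target is the token that follows it. The character-level tokenizer, the tiny corpus, and the helper names `build_vocab` and `make_training_pairs` are hypothetical simplifications; production LLMs use subword tokenizers such as byte-pair encoding over vastly larger corpora.

```python
def build_vocab(corpus):
    """Map each distinct character to an integer ID (a toy character-level tokenizer)."""
    chars = sorted(set(corpus))
    return {ch: i for i, ch in enumerate(chars)}

def make_training_pairs(token_ids, context_len):
    """Slide a window over the token stream. Each input window is paired with
    the window shifted one position ahead, i.e. the next-token targets."""
    pairs = []
    for start in range(len(token_ids) - context_len):
        inputs = token_ids[start:start + context_len]
        targets = token_ids[start + 1:start + context_len + 1]
        pairs.append((inputs, targets))
    return pairs

corpus = "hello world"  # stand-in for a real corpus such as Wikipedia
vocab = build_vocab(corpus)
ids = [vocab[ch] for ch in corpus]
pairs = make_training_pairs(ids, context_len=4)
print(len(pairs))  # 7
print(pairs[0])    # ([3, 2, 4, 4], [2, 4, 4, 5])
```

Packing targets this way lets one window supply a training signal at every position at once, rather than one prediction per example.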