Agentic AI, Simply Explained

AI, But Simple Issue #59

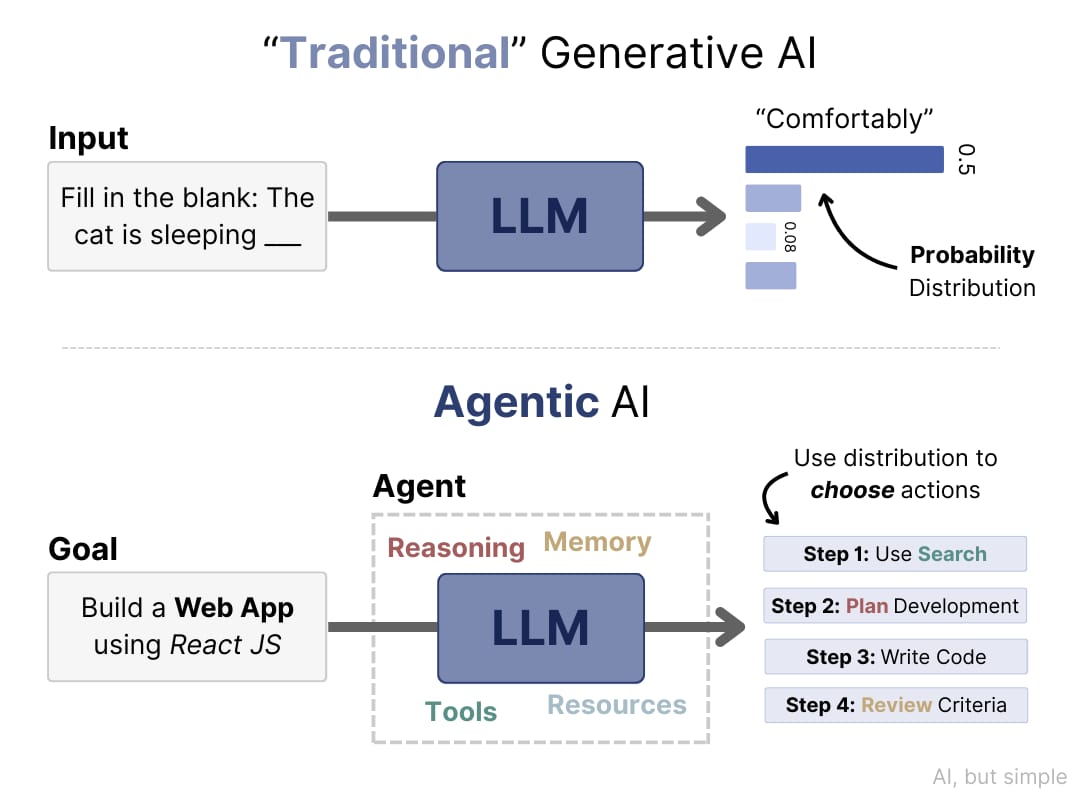

Agentic AI represents the current paradigm shift from AI that only responds to AI that acts on those responses.

To illustrate, while a tool like ChatGPT can help you write an email, agentic AI can go one (or many) steps further. An agent can research your recipient, create a draft, access tools relevant to your situation (perhaps your calendar app), and finally send the email.

The punchline? All you need is one prompt.

Imagine you’re a college student trying to manage your finances. Traditional generative AI could provide general explanations for any questions that you may ask and even provide suggestions, given a thorough verbal prompt of your situation.

However, with AI agents, using only the command of “improve my personal finances,” the agent would identify the different aspects of your individual financial situation that need attention and break them down into actionable steps to achieve the assigned task.

The agent could, for instance, analyze your spending habits across accounts, research investments that you can make, identify forgotten subscription services, and even readjust your strategies based on your current situation. Given proper access, it would do all of this without further human intervention.

Reactive or Proactive?

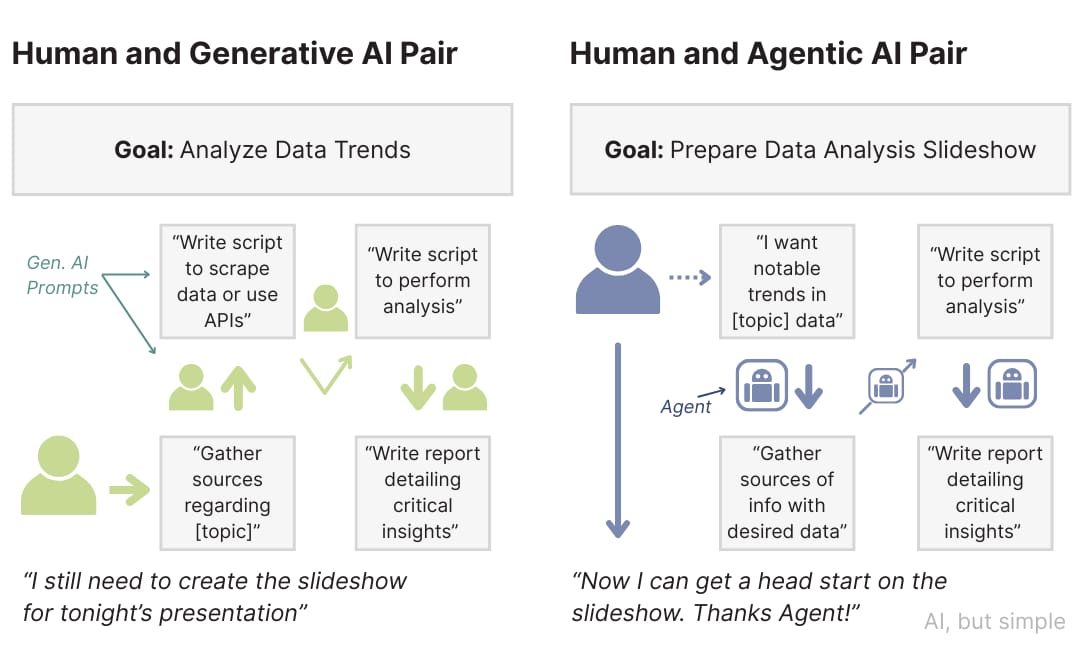

Traditional generative AI feels more like managing, while agentic AI is more like delegating. Traditional generative AI is reactive, requiring the user to guide it step-by-step through more complicated tasks—the user is actively involved in directing each step of the task.

Agentic AI is proactive, meaning it works on its own: the user gives the agent a goal, and it works independently to figure out how to get there using the tools and resources the user provides.

The user no longer directs the next move; rather, the user sets the objective and completely delegates the task to the system, trusting it to figure out execution.

This shift from reactive assistance to proactive execution is the backbone of why every major tech company is racing to build agentic systems.

How Agentic AI Works

Agentic AI operates much like a skilled employee: the agent assesses the given situation, draws up a plan, follows through, and learns from the results. This systematic approach is what lets it carry out complex, multi-step plans.

This problem-solving is similar to that of humans, except that it works within software with digital tools and data. Agentic AI brings to reality what was promised in Apple’s Siri voice assistant 14 years ago.

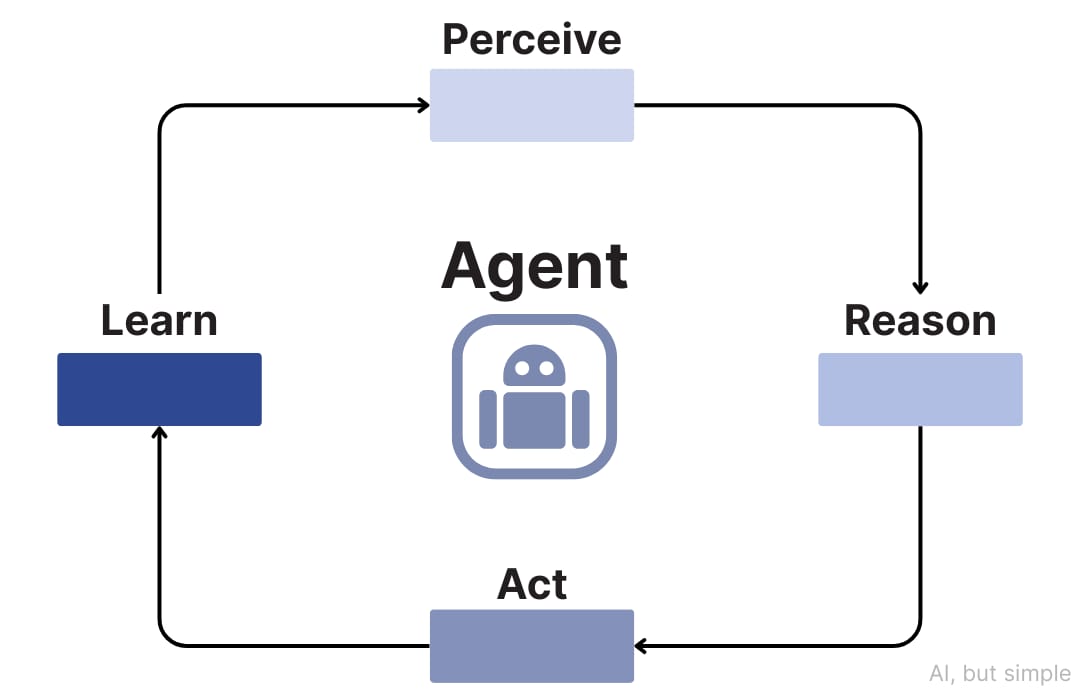

Agentic AI systems follow a continuous 4-step cycle:

Perceive: Agentic AI collects data from its digital surroundings, such as databases, APIs, and other information. It surveys this data to understand the “current state of things” before writing up a plan.

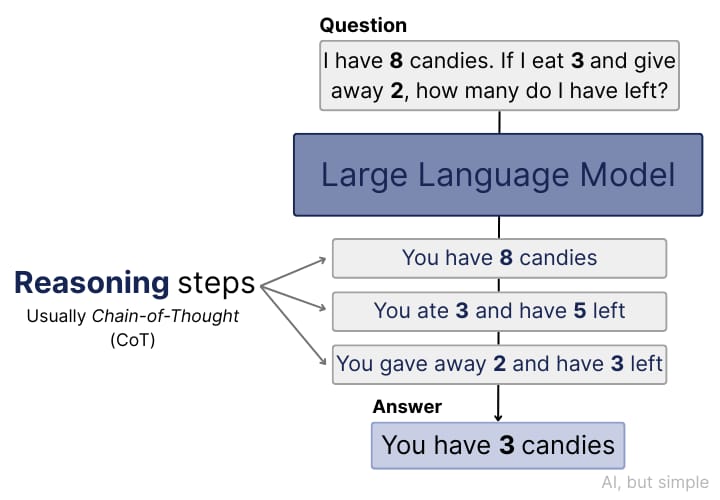

Reason: Using the context it has gathered, the agent begins planning its next move. Advanced agents may deploy methods like Monte Carlo Tree Search (MCTS) or Chain of Thought (CoT) reasoning to break down high-level goals into executable subtasks. Based on token probabilities, it will then pick out the most effective next step.

Act: The agent will use the available tools to work toward completing the assigned task. For example, it might make API calls, run programs, and generate content. It will also generate a concrete internal output, which it will later assess.

Learn: The agent will evaluate the results against its goal and reflect on the state of its progress. If satisfied, the agent will return its work to the user; otherwise, it will re-enter the four-step cycle until it reaches an “acceptable” finished product.

The edge agentic AI possesses over traditional software or automations is in the decision-making process “behind the scenes.” When an agent runs into an obstacle—a website is down or a tool fails—it will backtrack, adjust, and choose an alternative approach.
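The fallback behavior described above can be sketched in a few lines. This is a minimal illustration, not how any particular agent framework does it: the tool functions `fetch_from_primary` and `fetch_from_backup` and the helper `run_with_fallback` are hypothetical names invented for this example.

```python
from typing import Callable

def fetch_from_primary(query: str) -> str:
    # Hypothetical tool that simulates an outage (e.g., a website is down).
    raise ConnectionError("primary source is down")

def fetch_from_backup(query: str) -> str:
    # Hypothetical alternative tool the agent can switch to.
    return f"backup results for {query!r}"

def run_with_fallback(query: str, tools: list[Callable[[str], str]]) -> str:
    """Try each tool in order and move on to the next one when a tool fails."""
    errors = []
    for tool in tools:
        try:
            return tool(query)
        except Exception as exc:  # a real agent would catch narrower error types
            errors.append(f"{tool.__name__}: {exc}")
    raise RuntimeError("all tools failed: " + "; ".join(errors))

result = run_with_fallback("competitor pricing", [fetch_from_primary, fetch_from_backup])
print(result)
```

In a real agent, the choice of the next tool would come from the reasoning step rather than a fixed list, but the recover-and-retry structure is the same.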

Although AI agents seem quite autonomous, it is important to recognize that they aren’t granted complete autonomy. They operate within defined boundaries, such as a cap on the number of iterations, typically in the range of 10-15. This prevents the agents from getting caught in infinite cycles.
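To make the cycle and its iteration cap concrete, here is a toy sketch of a Perceive, Reason, Act, Learn loop. Everything in it is an assumption made for illustration: the "goal" is just a number to reach, the `reason` step is a hard-coded rule standing in for an LLM call, and the names `AgentState` and `run_agent` are hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class AgentState:
    goal: int                                     # toy goal: reach this number
    value: int = 0                                # current progress toward the goal
    history: list = field(default_factory=list)   # actions taken so far

def perceive(state: AgentState) -> int:
    # Perceive: survey the current state of things (here, the gap to the goal).
    return state.goal - state.value

def reason(gap: int) -> str:
    # Reason: choose the next step. A real agent would query an LLM here.
    return "add_5" if gap >= 5 else "add_1"

def act(state: AgentState, action: str) -> None:
    # Act: apply the chosen action and record it.
    state.value += 5 if action == "add_5" else 1
    state.history.append(action)

def is_done(state: AgentState) -> bool:
    # Learn: evaluate the result against the goal.
    return state.value >= state.goal

def run_agent(goal: int, max_iterations: int = 15):
    """Run the cycle until the goal is met or the iteration cap is hit."""
    state = AgentState(goal=goal)
    for _ in range(max_iterations):
        action = reason(perceive(state))
        act(state, action)
        if is_done(state):
            return state, True
    return state, False  # cap reached: stop instead of looping forever

state, succeeded = run_agent(goal=12)
print(state.history, succeeded)
```

The `max_iterations` argument plays the role of the boundary described above: if the goal is out of reach, the loop ends after a fixed number of passes and reports failure.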

The Reasoning Engine

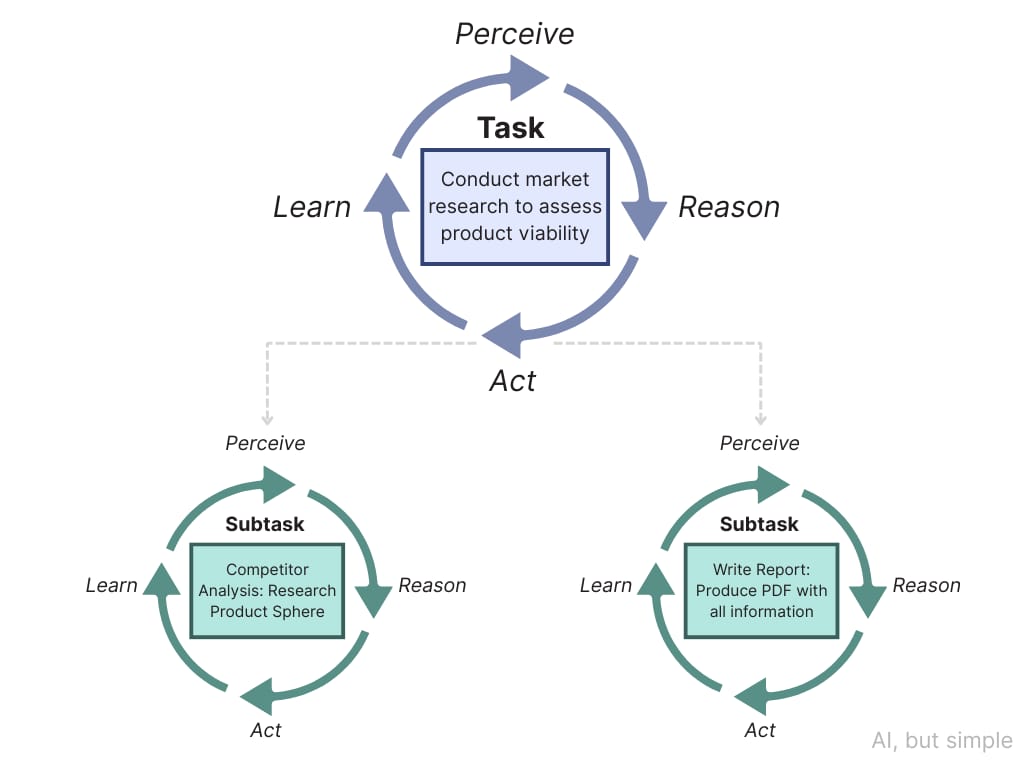

You can visualize the cyclic process as one large loop that works to complete the overall goal. But this main loop is not the only one at play. For each subtask, the agent creates smaller loops, and these loops work on their own until the subtask is completed.

The main loop keeps track of their progress (like a progress bar), checking how each subtask is going. In this way, tasks and loops are nested inside each other.

For example:

- Major Task: Conduct market research to assess product viability
  - Subtask 1: Competitor Analysis
    - Research product sphere and environmental factors
  - Subtask 2: Write Report
    - Produce PDF with all information in organized report

Each of these subtasks goes through its own “Perceive, Reason, Act, and Learn” cycle while the major task’s cycle is still in progress.
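The nesting of loops can be sketched as an outer loop that tracks progress and an inner loop per subtask. This is a simplified illustration under stated assumptions: subtasks run one after another rather than concurrently, each "step" is just a string that gets marked complete, and the function names `run_subtask` and `run_major_task` are hypothetical.

```python
def run_subtask(name, steps, max_iterations=10):
    """Inner loop: work through one subtask's steps until none remain."""
    remaining = list(steps)
    completed = []
    for _ in range(max_iterations):
        if not remaining:
            break
        completed.append(remaining.pop(0))  # stand-in for doing the actual work
    return {"subtask": name, "completed": completed, "done": not remaining}

def run_major_task(plan):
    """Outer loop: run each subtask and track overall progress."""
    reports = []
    for name, steps in plan.items():
        reports.append(run_subtask(name, steps))
        finished = sum(r["done"] for r in reports)
        print(f"progress: {finished}/{len(plan)} subtasks done")
    return reports

plan = {
    "Competitor Analysis": ["research product sphere", "research environmental factors"],
    "Write Report": ["produce PDF report"],
}
reports = run_major_task(plan)
```

The outer loop only sees each subtask's report, which is what lets it behave like the progress bar described earlier.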

Within these loops, decisions and the agent’s “thinking” occur in steps. This step-by-step process is crucial because it allows the agent to "remember" previous actions and results.

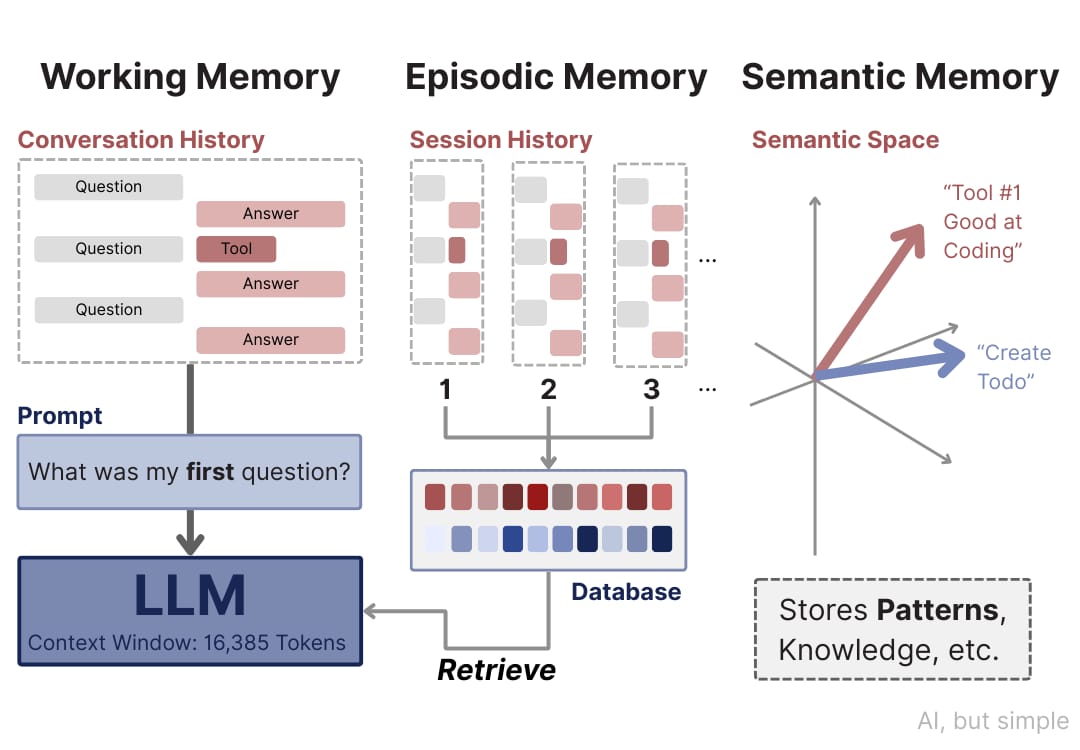

This ability to retain information about its progress is often called state management, and it is the effective memory of the agent. There are many types of memory used in agentic systems, but notable ones include the working memory, episodic memory, and semantic memory.

The working memory is essentially the short-term memory; the agent remembers the context for the current task and its subtasks but loses information about previous tasks. It is usually implemented as a context window.

Using working memory, the agent performs each major task independently. The drawback here is that it may overwrite a previous request that the user made in order to fulfill the most recent task (often seen in programming).

Working memory is very useful for short-term tasks and is utilized in many chatbots.

Episodic memory is more of a longer-term memory; the agent retains information on previous tasks, similar to how a human may remember specific procedures they learned many months or years ago.

This is implemented by keeping a record of key events or successes, which the agent will draw upon in the future.

Semantic memory is similar to the concept of semantic space, where information is stored in a high-dimensional space, and ideas are represented in this space mathematically.

Semantic memory finds patterns of how tools are used and can apply these patterns across a broad range of tasks. Semantic memory also stores general facts and information, rather than specific events.
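The three memory types can be pictured with a small data structure. This is only a sketch under simplifying assumptions: working memory is a fixed-size queue standing in for a context window, episodic memory is a plain list of past task records, and semantic memory is a dictionary of facts (real systems often use vector embeddings instead). The class name `AgentMemory` and its methods are hypothetical.

```python
from collections import deque

class AgentMemory:
    def __init__(self, working_size=3):
        # Working memory: short-term context; the oldest items fall out first.
        self.working = deque(maxlen=working_size)
        # Episodic memory: records of specific past tasks and their outcomes.
        self.episodic = []
        # Semantic memory: general facts, not tied to any single event.
        self.semantic = {}

    def observe(self, message):
        self.working.append(message)

    def record_episode(self, task, outcome):
        self.episodic.append({"task": task, "outcome": outcome})

    def learn_fact(self, key, value):
        self.semantic[key] = value

    def recall_episodes(self, keyword):
        # Look up past tasks whose description mentions the keyword.
        return [e for e in self.episodic if keyword.lower() in e["task"].lower()]

memory = AgentMemory(working_size=2)
for msg in ["step 1", "step 2", "step 3"]:
    memory.observe(msg)
memory.record_episode("Competitor analysis for product X", "success")
memory.learn_fact("report_format", "PDF")

print(list(memory.working))          # "step 1" has been dropped
print(memory.recall_episodes("competitor"))
print(memory.semantic["report_format"])
```

The bounded queue shows the drawback of working memory mentioned above: once the window is full, earlier context is lost unless it was also saved as an episode or a fact.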

These steps in the agentic loop mark the specific decision points at which the agent stops. The reasoning model emits each decision point as structured JSON output (a format LLMs encounter often during training), which the agent uses to inform future decisions.

Think of the LLM as the “brain” and the actual computational programs as the “body” of the agent, which when combined form a powerful unit that goes through each decision point.

The decision points act as natural resting stops where the system pauses reasoning to act and observe results. With the new data, it will resume reasoning.
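A decision point can be sketched as "parse the model's JSON, run the named tool, hand back the observation." The example below is a minimal illustration, assuming a JSON shape with `action` and `parameters` keys; the `web_search` function is a hypothetical stand-in that does not call any real search API, and `handle_decision_point` is an invented helper name.

```python
import json

def web_search(query: str) -> str:
    # Hypothetical tool: a stand-in that does not perform a real search.
    return f"results for: {query}"

# Registry of tools the "body" of the agent is allowed to run.
TOOLS = {"web_search": web_search}

def handle_decision_point(raw: str) -> str:
    """Parse the model's JSON output and run the tool it names."""
    decision = json.loads(raw)
    action = decision.get("action")
    tool = TOOLS.get(action)
    if tool is None:
        # Unknown actions are reported back rather than executed.
        return f"error: unknown action {action!r}"
    return tool(**decision.get("parameters", {}))

# Example output from the "brain" (the LLM), written by hand for this sketch.
llm_output = (
    '{"reasoning": "I need competitor data first", '
    '"action": "web_search", '
    '"parameters": {"query": "tech startup pricing 2024"}}'
)
observation = handle_decision_point(llm_output)
print(observation)
```

The returned observation is the "new data" that would be fed back to the model before it resumes reasoning. Restricting execution to a fixed registry of tools is one simple way to keep the agent inside defined boundaries.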

    {
      "plan": {
        "goal": "market_analysis",
        "steps": [
          {"action": "research", "target": "competitors"},
          {"action": "analyze", "input": "research_results"}
        ]
      }
    }

The Paradox of Discrete Steps

Here's the interesting part: agentic AI appears to think step-by-step, but LLMs don’t actually “understand” the words they produce. How can the LLM that writes the JSON, the model that predicts the next word, reason in a step-by-step manner?

    {
      "reasoning": "I need competitor data first, then analysis",
      "action": "web_search",
      "parameters": {
        "query": "tech startup pricing 2024"
      }
    }

Above, the LLM is not actually “deciding” to search. It figures out that this pattern of words together leads to successful output, which leads it to infer that searching the web is the best path forward.

This behavior is trained through a technique called Reinforcement Learning from Human Feedback (RLHF), in which outputs that humans rate favorably are rewarded, and the model learns to reproduce them in future generations.

So it’s not actually planning ahead; rather, it reproduces token patterns that work, which is how the agent knows what step to take next. This is its semantic memory at work.

The model has seen patterns like this many times during training, so it naturally generates these logical-seeming JSON outputs even without a real grasp of what they mean.

The paradox lies in the fact that this pseudo-reasoning is able to produce genuine results.

The Current Environment and Future Outlook

Agents are appearing rapidly. GitHub Copilot is an excellent example of this: it has access to your entire repository and is able to modify code following the user’s prompt or goal. Anthropic has also rolled out tooling for building agents on top of Claude.

Agents have also been integrated into many applications already, including customer service and research assistants, which help users make their work more efficient and standardized.

However, there is much work to be done. For one, agents are still monitored. For instance, customer service chatbots are monitored in order to maintain quality support and prevent inappropriate comments from being made.

This monitoring is still one of the biggest limitations of agentic AI. It is important to keep agents operating within the defined boundaries set by the user for ethical and performance reasons.

A recent study from Carnegie Mellon University (CMU) suggests that agentic AI is less capable, and therefore less ready for widespread use, than it is often made out to be.

The researchers evaluated agents on benchmark tasks such as searching the web, running applications, and communicating with coworkers, and found that the best agent at the time could successfully complete only 30% of the tasks autonomously.

The bottom line is that most simple tasks can be handily dealt with, but “longer horizon tasks are still beyond the reach of current systems” (Xu et al., 2025).

While agentic AI may have its limitations in real-world use, it presents a vast landscape of new technology that can be used to make menial tasks more efficient and our productivity higher.

Here’s a special thanks to our biggest supporters:

Sushant Waidande

That’s it for this week’s issue of AI, but simple. See you next week!

—AI, but simple team