KL Divergence, Simply Explained

AI, But Simple Issue #63

Hello from the AI, But Simple team! If you enjoy our content, consider supporting us so we can keep doing what we do.

Our newsletter is no longer sustainable to run at no cost, so we’re relying on different measures to cover operational expenses. Thanks again for reading!

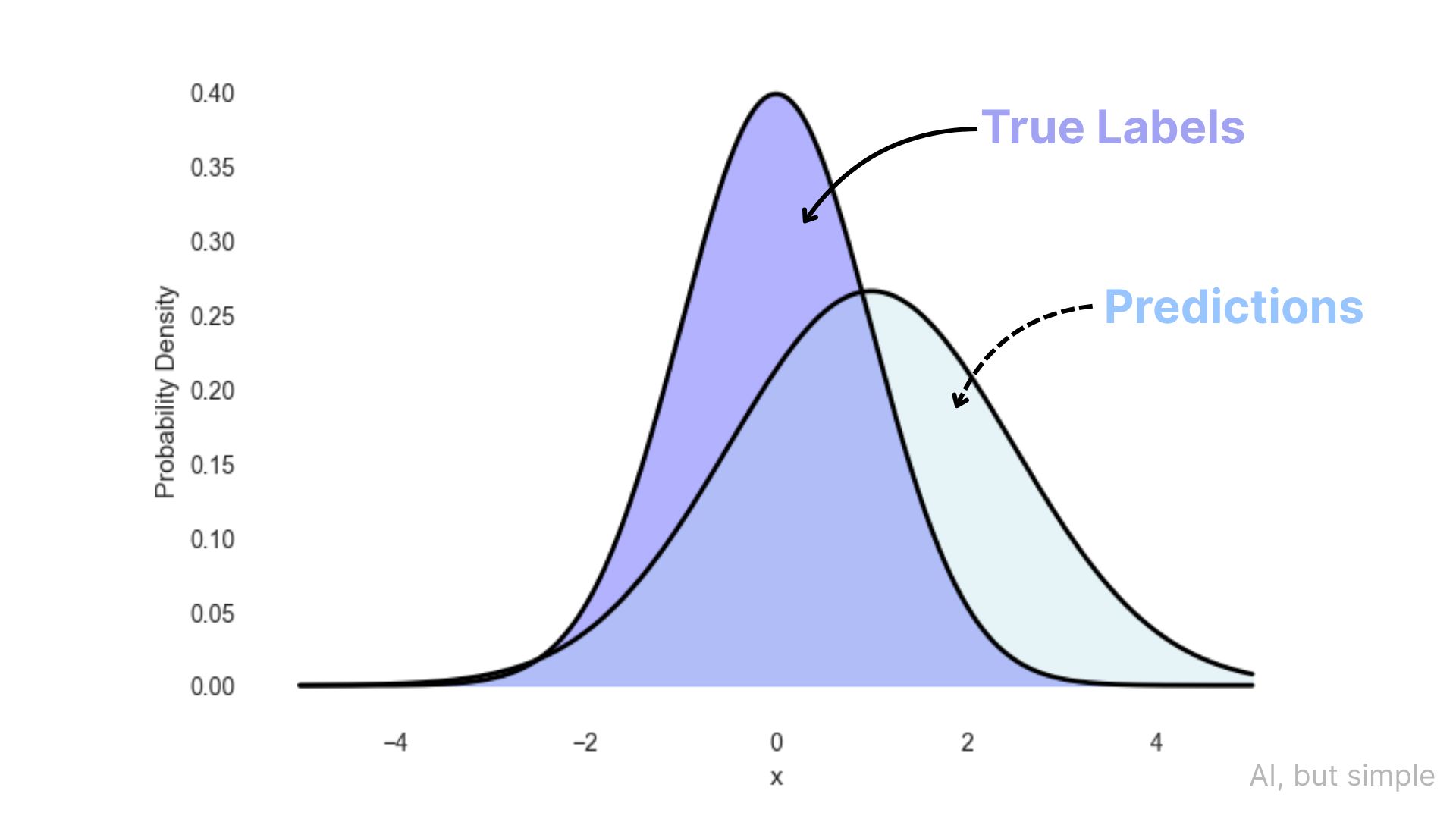

KL divergence (short for Kullback-Leibler divergence) is a measure of how much one probability distribution differs from another.

In modern machine learning, this “difference” tells us about the quality of our predictions.

By comparing the distribution a model has learned against the known true distribution of the outputs, we can see how “close” the learned distribution is to the true one, and therefore how accurate the model is.

For instance, two different probability distributions are shown below. The predicted distribution is somewhat off from the true labels.

But how exactly is this difference between distributions measured? In this issue, we will answer that question as we dive into KL divergence and how it is used in machine learning.
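As a quick preview, here is a minimal Python sketch of the idea. It assumes the standard discrete definition, D_KL(P || Q) = Σ p(x) · log(p(x) / q(x)). The function name `kl_divergence` and the example values in `true_dist` and `pred_dist` are made up purely for illustration.

```python
import math

def kl_divergence(p, q):
    """Discrete KL divergence D_KL(P || Q), measured in nats.

    Assumes p and q are lists of probabilities over the same outcomes,
    and that q is nonzero wherever p is nonzero.
    """
    # Outcomes with p_i == 0 contribute nothing, by convention.
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical values: a "true" distribution and a slightly-off prediction.
true_dist = [0.7, 0.2, 0.1]
pred_dist = [0.5, 0.3, 0.2]

# A small positive number: the prediction is somewhat off.
print(kl_divergence(true_dist, pred_dist))

# Comparing a distribution with itself gives 0.0: a perfect match.
print(kl_divergence(true_dist, true_dist))
```

The closer the predicted distribution gets to the true one, the closer the result gets to zero.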