LLM Knowledge Distillation, Simply Explained

AI, But Simple Issue #78

Hello from the AI, But Simple team! If you enjoy our content, consider supporting us so we can keep doing what we do.

Our newsletter is no longer sustainable to run at no cost, so we’re relying on different measures to cover operational expenses. Thanks again for reading!

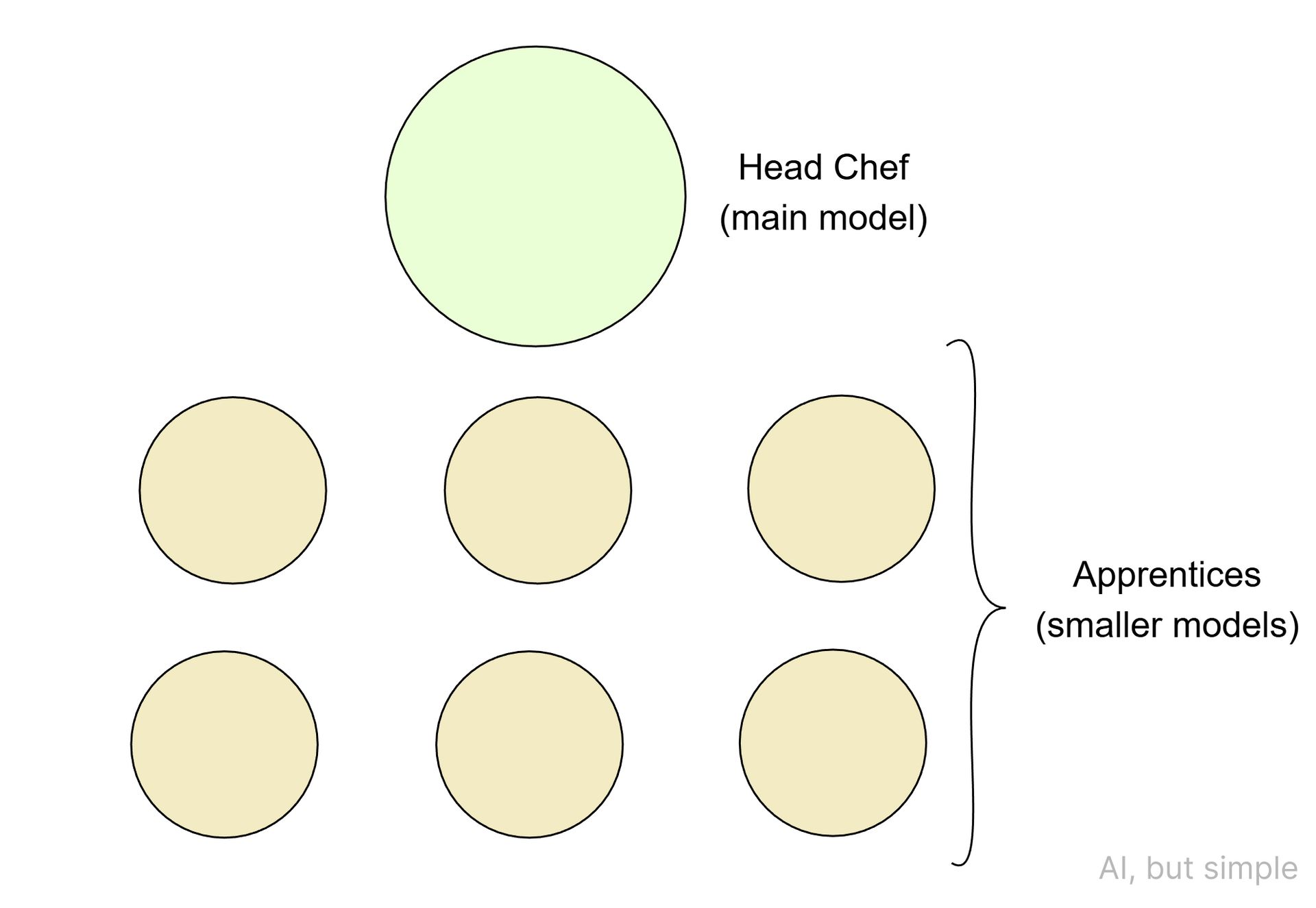

Imagine a restaurant kitchen with one head chef and many apprentices. The head chef has spent decades perfecting hundreds of his own recipes.

This involved fine-tuning the ratios of his ingredients, testing subtle variations, and experimenting with different spices and techniques until every recipe had a clear formula to be followed.

The apprentices, on the other hand, are young, quick, and eager, but they don’t have the technical expertise or the breadth of knowledge that the head chef possesses.

Over time, the apprentices learn a few specific dishes each from the head chef. They observe the most common patterns and behaviors of the head chef in making these specific dishes and pick up his intuition as well.

Eventually, each apprentice is qualified to make a subset of dishes at a quality similar to the head chef’s, but none of them covers the vast array of recipes and techniques that the head chef knows.

This is how knowledge distillation works for large language models (LLMs).

Rather than run a large, powerful, but expensive LLM (the “teacher”) for every task, we can train a smaller model (the “student”) to “mimic” the teacher’s behavior. Typically, knowledge distillation is used for narrower, more specific use cases rather than for general-purpose applications.
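To make “mimicking” a little more concrete, here is a minimal sketch of one common way to measure how closely a small model (the student) matches a large one (the teacher): compare their temperature-softened output distributions with a KL divergence, in the spirit of the classic soft-target formulation. The function names, the toy logits, and the temperature of 2.0 are all illustrative assumptions, not values from any particular LLM or library.

```python
import math

def softmax(logits, temperature=1.0):
    """Convert raw scores to probabilities; a higher temperature softens the distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2 (hypothetical helper)."""
    p = softmax(teacher_logits, temperature)  # teacher's soft targets
    q = softmax(student_logits, temperature)  # student's predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2

# Toy next-token scores over a 3-word vocabulary (made-up numbers).
teacher_logits = [4.0, 1.0, 0.5]
good_student = [3.8, 1.1, 0.4]   # close to the teacher
poor_student = [0.5, 4.0, 1.0]   # prefers a different token

loss_good = distillation_loss(teacher_logits, good_student)
loss_poor = distillation_loss(teacher_logits, poor_student)
print(loss_good < loss_poor)
```

In this sketch, training the student would mean adjusting its weights to push this loss toward zero, so a student whose scores track the teacher’s gets a smaller loss than one that favors a different token.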

Knowledge distillation was introduced in a 2015 paper by Geoffrey Hinton, Oriol Vinyals, and Jeff Dean called “Distilling the Knowledge in a Neural Network,” but it has only recently started to catch on for LLM applications.

Knowledge distillation can be used to cut compute costs, improve inference speed, and shrink model size. It is one way to perform a common machine learning technique called transfer learning.