Machine Learning Explainability (XAI), Simply Explained

AI, But Simple Issue #84

As machine learning models become increasingly complex, their decisions often become harder to understand and interpret.

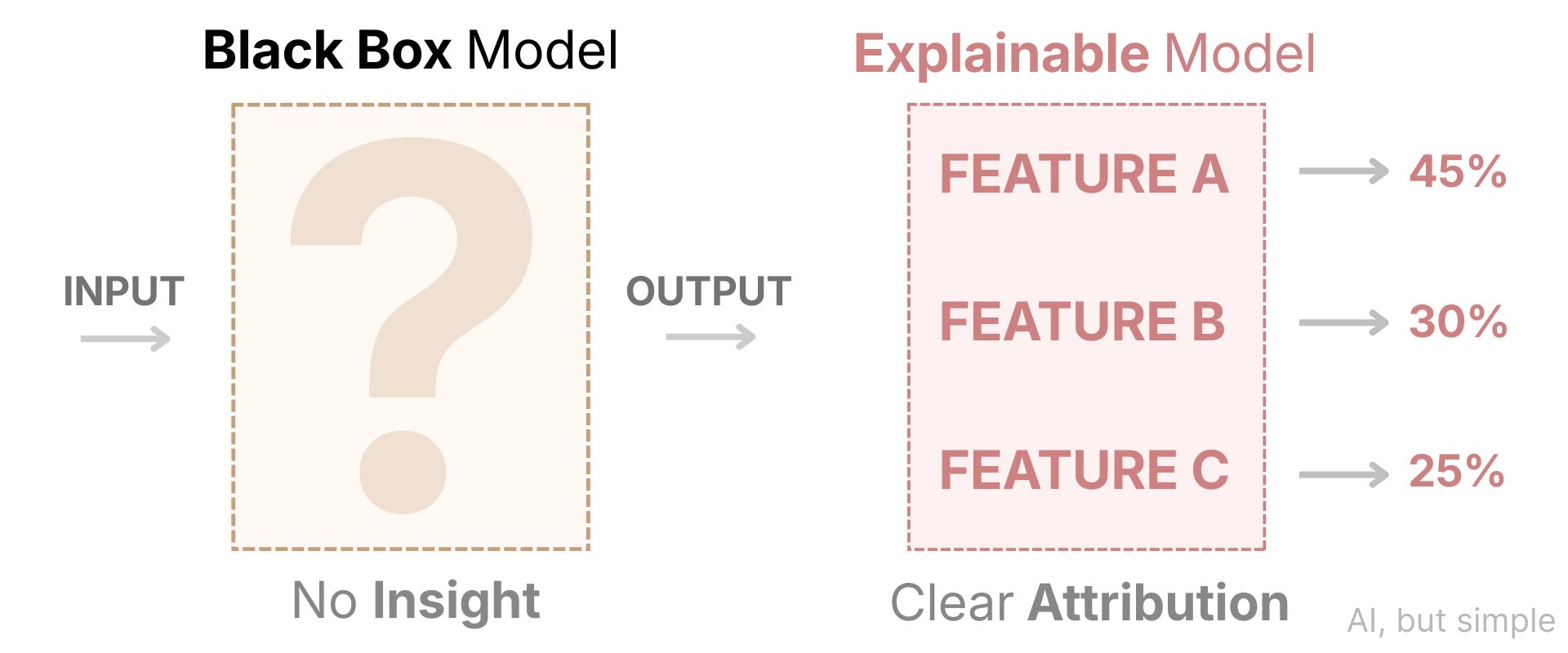

Models like deep neural networks, ensemble methods, and large-scale transformers can achieve remarkable predictive performance, but they mostly operate as black boxes.

On their own, these models offer little insight into how inputs are transformed into outputs.

Machine learning explainability, often discussed under the name explainable artificial intelligence (XAI), addresses this challenge by providing methods and tools for interpreting, understanding, and trusting the decisions made by machine learning models.
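To make the idea concrete, here is a minimal sketch of one way to probe a black box from the outside. It uses permutation importance, chosen purely for illustration: shuffle one input feature at a time and measure how much the model's error grows. The `black_box` function, the synthetic data, and all helper names are hypothetical stand-ins, not taken from any particular library or from a real model.

```python
import random

def black_box(x):
    # Hypothetical opaque model: pretend we can call it but not read it.
    # Feature 0 matters a lot, feature 1 a little, feature 2 not at all.
    return 3.0 * x[0] + 0.5 * x[1] ** 2 + 0.0 * x[2]

def mean_squared_error(model, X, y):
    # Average squared difference between predictions and targets.
    return sum((model(row) - target) ** 2 for row, target in zip(X, y)) / len(X)

def permutation_importance(model, X, y, n_features, seed=0):
    # For each feature, shuffle its column across rows and record
    # how much the error increases compared with the unshuffled data.
    rng = random.Random(seed)
    baseline = mean_squared_error(model, X, y)
    importances = []
    for j in range(n_features):
        column = [row[j] for row in X]
        rng.shuffle(column)
        X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
        importances.append(mean_squared_error(model, X_perm, y) - baseline)
    return importances

# Synthetic data (an assumption for this sketch): 200 rows, 3 features in [-1, 1].
data_rng = random.Random(42)
X = [[data_rng.uniform(-1, 1) for _ in range(3)] for _ in range(200)]
y = [black_box(row) for row in X]

scores = permutation_importance(black_box, X, y, n_features=3)
for j, score in enumerate(scores):
    print(f"feature {j}: error increase = {score:.4f}")
```

Even without looking inside the model, the scores reveal which inputs the predictions depend on: shuffling feature 0 hurts the most, feature 1 hurts a little, and feature 2 changes nothing.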