Neural Radiance Fields (NeRFs), Simply Explained

AI, But Simple Issue #76

Hello from the AI, But Simple team! If you enjoy our content, consider supporting us so we can keep doing what we do.

Our newsletter is no longer sustainable to run at no cost, so we’re relying on different measures to cover operational expenses. Thanks again for reading!

Imagine taking a picture in the room you’re in right now. Take one step to the right and snap another. The room in this new picture will look different from the first. Take two steps forward and turn around. Now you’ve got a picture that includes objects and features that may not have been in the first two.

But sometimes a single picture isn’t enough; we need more than one angle to understand what an object or scene really looks like. An extra dimension, perhaps.

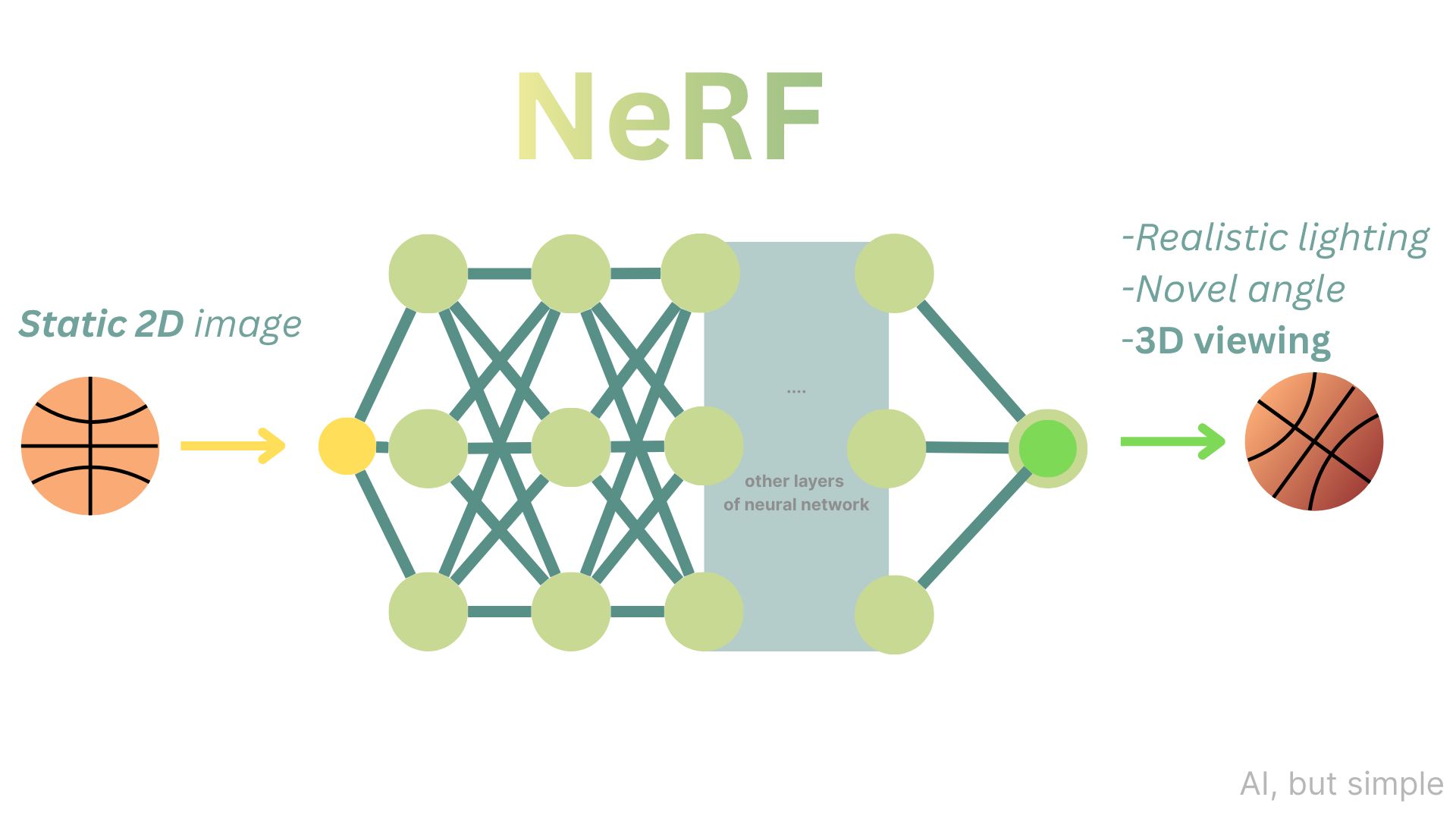

Neural Radiance Fields (NeRFs) actualize this concept, turning a set of 2D images into a 3D representation of a scene. They were first introduced in a 2020 paper by Ben Mildenhall and his co-authors.

A NeRF can render views of a scene that were never photographed. It does this by learning a continuous function that describes how light (radiance) interacts with and passes through every point in 3D space.
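To make the idea of a "continuous function over 3D space" concrete, here is a minimal sketch in plain Python. It is not a trained NeRF: the name `toy_radiance_field` and the formulas inside it are made up for illustration, standing in for the neural network a real NeRF would learn from photos. What it shares with a NeRF is the interface: give it any 3D point and a viewing direction, and it returns a color and a density (how much that point blocks light).

```python
import math

def toy_radiance_field(position, view_dir):
    """Hypothetical stand-in for a learned NeRF.

    Maps a 3D point and a viewing direction to (rgb, density).
    The formulas are hand-written assumptions, not learned from images.
    """
    x, y, z = position

    # Density: a soft-edged ball of radius 1 centered at the origin.
    # Roughly 5 inside the ball, falling smoothly toward 0 outside it.
    dist = math.sqrt(x * x + y * y + z * z)
    density = 5.0 / (1.0 + math.exp(10.0 * (dist - 1.0)))

    # Color: depends on the viewing direction, so the same point can
    # look different from different angles (brighter green along +z).
    norm = math.sqrt(sum(c * c for c in view_dir)) or 1.0
    facing = max(0.0, view_dir[2] / norm)
    rgb = (0.8, 0.3 + 0.4 * facing, 0.2)

    return rgb, density

# The function is defined everywhere, not only on a grid of samples.
rgb_inside, density_inside = toy_radiance_field((0.0, 0.0, 0.0), (0.0, 0.0, 1.0))
rgb_outside, density_outside = toy_radiance_field((3.0, 0.0, 0.0), (1.0, 0.0, 0.0))
```

Because the function can be queried at any coordinate, a renderer can ask it about points along camera rays from viewpoints that no photo ever covered.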