
Small Language Models (The Future?)

AI, But Simple Issue #68

Hello from the AI, But Simple team! If you enjoy our content, consider supporting us so we can keep doing what we do.

Our newsletter is no longer sustainable to run at no cost, so we’re relying on different measures to cover operational expenses. Thanks again for reading!

In recent years, the popularity of transformer-based LLMs and LLM applications such as AI agents has skyrocketed. Compute is in high demand, and parameter counts have soared: the largest LLMs now reach hundreds of billions or even trillions of parameters.

If you wanted to run one of these models yourself, consumer-grade hardware would not be enough. Even small-to-medium-sized companies typically lack the budget to train a model of this size.

Luckily, researchers have been turning to quantization and other techniques that reduce the compute and VRAM needed to store, train, and run models. Another recent research direction is to shrink the models themselves by reducing their parameter count.
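To make quantization concrete, here is a minimal sketch of symmetric, per-tensor int8 quantization in plain Python. It is one simple scheme among many and is not the method used by any particular model. The function names `quantize_int8` and `dequantize_int8` and the sample weights are hypothetical.

```python
def quantize_int8(weights):
    """Map floats to int8 values using one shared scale (symmetric, per-tensor)."""
    max_abs = max(abs(w) for w in weights)
    # Guard against an all-zero tensor, where any scale works.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range [-127, 127].
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q, scale):
    """Recover approximate floats from int8 values and the shared scale."""
    return [v * scale for v in q]


# Hypothetical example weights.
weights = [0.12, -0.53, 0.98, -1.27, 0.004]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q)
print(f"scale={scale:.5f}, max error={max_error:.5f}")
```

Each weight now takes 1 byte instead of the 4 bytes of float32. The cost is a rounding error of at most half a quantization step (`scale / 2`) per weight.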

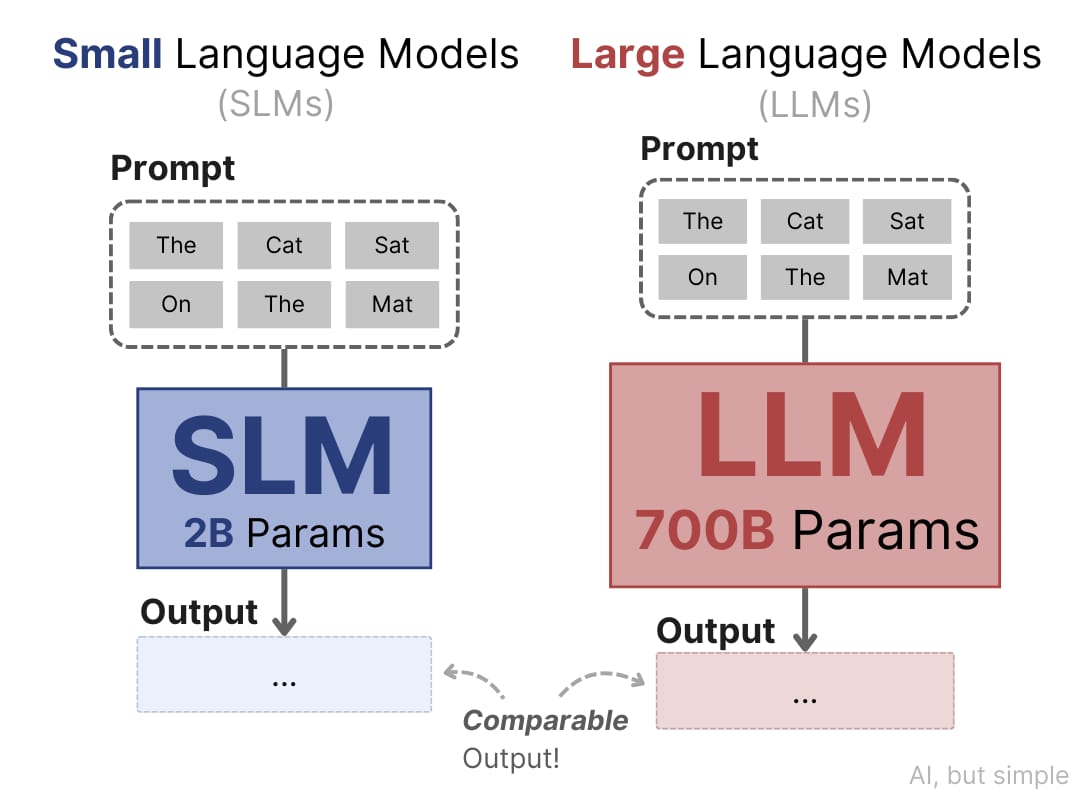

This is where small language models (SLMs) come in. Small language models are neural language models that are much smaller in size (typically billions of parameters or fewer) than today’s massive LLMs (which often have hundreds of billions).

By design, SLMs can run on consumer-grade devices such as smartphones, embedded systems, or PCs, offering fast inference at much lower cost.

Researchers often consider models under about 10 billion parameters to be SLMs, since such models can fit in the memory of common hardware and run with low latency.
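A quick back-of-the-envelope calculation shows why parameter count matters so much for fitting on common hardware. This sketch counts only the memory for the model weights and ignores activations, the KV cache, and runtime overhead. The model sizes are illustrative and do not refer to specific models. The helper name `weight_memory_gb` is hypothetical.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params, precision):
    """Approximate memory for model weights alone, in gigabytes (1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


# Illustrative model sizes in parameters.
for name, params in [("3B model", 3e9), ("7B model", 7e9), ("70B model", 70e9)]:
    row = ", ".join(
        f"{p}: {weight_memory_gb(params, p):.1f} GB" for p in BYTES_PER_PARAM
    )
    print(f"{name} -> {row}")
```

At fp16, the weights of a 7B model take about 14 GB, which fits within a 24 GB consumer GPU. A 70B model needs about 140 GB at the same precision, and still about 35 GB even at 4-bit precision.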

SLMs and LLMs share the same basic architecture (typically Transformers) but differ in scale and usage. LLMs excel at general tasks largely because their size lets them store broad knowledge, which is a major reason they use tens or hundreds of billions of parameters.
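Because SLMs and LLMs share the same design, much of the difference in scale comes down to a few hyperparameters: the number of layers, the hidden width, and the vocabulary size. The sketch below uses a common rough approximation for a decoder-only Transformer. It assumes standard multi-head attention (about 4·d² parameters for the Q, K, V, and output projections) and an MLP with a 4x hidden expansion (about 8·d²), giving roughly 12·d² per layer plus a token embedding matrix. The two configurations and the function name are hypothetical. Variants such as gated MLPs or grouped-query attention change the constants.

```python
def approx_transformer_params(n_layers, d_model, vocab_size):
    """Rough decoder-only Transformer parameter count.

    Assumes ~4*d^2 attention parameters and ~8*d^2 MLP parameters
    (4x expansion) per layer, plus one token embedding matrix tied
    with the output layer. Biases, layer norms, and position
    embeddings are ignored.
    """
    per_layer = 12 * d_model ** 2
    embedding = vocab_size * d_model
    return n_layers * per_layer + embedding


# Two hypothetical configurations with the same architecture, different scale.
small = approx_transformer_params(n_layers=24, d_model=2048, vocab_size=32000)
large = approx_transformer_params(n_layers=80, d_model=8192, vocab_size=32000)
print(f"small: {small / 1e9:.2f}B params, large: {large / 1e9:.2f}B params")
```

As a sanity check, `approx_transformer_params(12, 768, 50257)` gives about 123.5 million, close to the roughly 124 million parameters commonly reported for GPT-2 small. Width enters quadratically, so quadrupling `d_model` grows each layer's parameter count about sixteenfold.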