A Deep Dive into Modern Transformers: RoPE, Activation Functions, Quantization

AI, But Simple Issue #86

Hello from the AI, But Simple team! If you enjoy our content, consider supporting us so we can keep doing what we do.

Our newsletter is no longer sustainable to run at no cost, so we’re relying on different measures to cover operational expenses. Thanks again for reading!

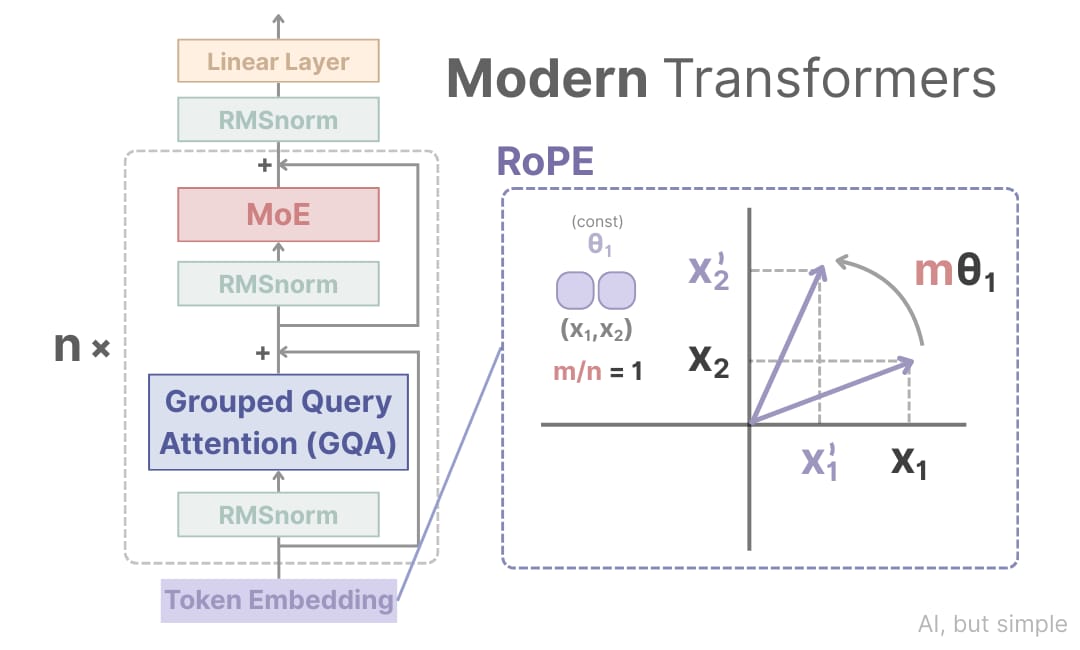

The core idea behind large language models (LLMs) is widely studied: feed in a sequence of tokens, process them with attention, then obtain a probability distribution over the possible next tokens to sample from.

Most readers understand the fundamentals of transformers, often through Vaswani et al.’s “Attention Is All You Need,” published in 2017.

However, modern transformers used in LLMs like GPT-5, Gemini 3 Pro, and DeepSeek R1 have undergone significant updates and optimizations.

In this issue, we’ll dive deep into these changes, why they were made, and how they improve performance.

Before we continue, let’s unpack some general terms we should understand:

Perplexity: the metric used to measure how “confused” an LLM is by the text it sees. Low perplexity indicates confidence, while high perplexity indicates confusion.

Mathematically, perplexity is Euler’s number (e) raised to the power of the average per-token cross-entropy loss (measured in nats). If a model has a perplexity of 10, it is, on average, as uncertain as if it were choosing among 10 equally likely tokens.
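To make the definition concrete, here is a minimal Python sketch. It assumes we already have the probability the model assigned to each correct token; the function name `perplexity` and the example probabilities are illustrative, not taken from any particular library or model.

```python
import math

def perplexity(token_probs):
    """Perplexity = e raised to the average cross-entropy (in nats).

    token_probs: probabilities the model assigned to each correct token
    (hypothetical inputs, used here only for illustration).
    """
    # Cross-entropy per token is the negative log-probability of the correct token.
    nll = [-math.log(p) for p in token_probs]
    cross_entropy = sum(nll) / len(nll)
    return math.exp(cross_entropy)

# A model that always spreads its belief over 10 equally likely tokens
# assigns probability 0.1 to the correct one, giving a perplexity of about 10.
uniform_ppl = perplexity([0.1] * 5)

# A more confident model (higher probabilities on the correct tokens)
# ends up with a lower perplexity.
confident_ppl = perplexity([0.9, 0.8, 0.95])

print(uniform_ppl, confident_ppl)
```

In practice, the per-token probabilities would come from the model’s softmax output over its vocabulary, averaged over a held-out dataset rather than a handful of tokens.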

Length generalization: how well a transformer or LLM generalizes (“performs well”) on sequences longer than those it was trained on. This depends heavily on choosing the right positional encoding.

This article is part two of our “modern transformers” coverage. Although both articles can be read standalone, the earlier one provides some useful context. If you haven’t read it, you can find it here: